What is DataOps?

DataOps is the use of agile development practices to create, deliver, and optimize data products, quickly and cost-effectively. Data products include data pipelines, data-dependent apps, dashboards, machine learning models, other AI-powered software, and even answers to ad hoc SQL queries. DataOps is practiced by modern data teams, including data engineers, architects, analysts, scientists and operations.

What are Data Products?

A data product is any tool or application that processes data and generates results. The primary objective of data products is to manage, organize, and make sense of the large amount of data that an organization generates and collects.

What is a DataOps Platform?

DataOps is more of a methodology than a specific, discrete platform. However, a platform that supports multiple aspects of DataOps practices can assist in the adoption and effectiveness of DataOps.

Every organization today is in the process of harnessing the power of their data using advanced analytics, which is likely running on a modern data stack. On top of the data stack, organizations create “data products.”

These data products range from advanced analytics, data pipelines, and machine learning models to embedded AI solutions. All of these work together to help organizations gain insights, make decisions,and power applications. In order to extract the maximum value out of these data products, companies employ a DataOps methodology that allows them to efficiently extract value out of their data.

What does DataOps do for an organization?

DataOps is a set of agile-based development practices that make it faster, easier, and less costly to develop, deploy, and optimize data-powered applications.

Using an agile approach, an organization identifies a problem to solve, and then breaks it down into smaller pieces. Each piece is then assigned to a team that breaks down the work to solve the problem into a defined set of time – usually called a sprint – that includes planning, work, deployment, and review.

Who benefits from DataOps?

DataOps is for organizations that want to not only succeed, but to outpace the competition. With DataOps, an organization is continually striving to create better ways to manage their data, which should lead to being able to use this data to make decisions that help them.

The practice of DataOps can benefit organizations by fostering cross-matrix collaboration between teams of data scientists, data engineers, data analysts, operations, and product owners. Each of these roles needs to be in sync in order to use the data in the most efficient manner, and DataOps strives to accomplish this.

Research by Forrester indicates that companies that embed analytics and data science into their operating models to bring actionable knowledge into every decision are at least twice as likely to be in a market-leading position than their industry peers.

10 Steps of the DataOps Lifecycle

DataOps is not limited to making existing data pipelines work effectively, getting reports and artificial intelligence and machine learning outputs and inputs to appear as needed, and so on. DataOps actually includes all parts of the data management lifecycle.

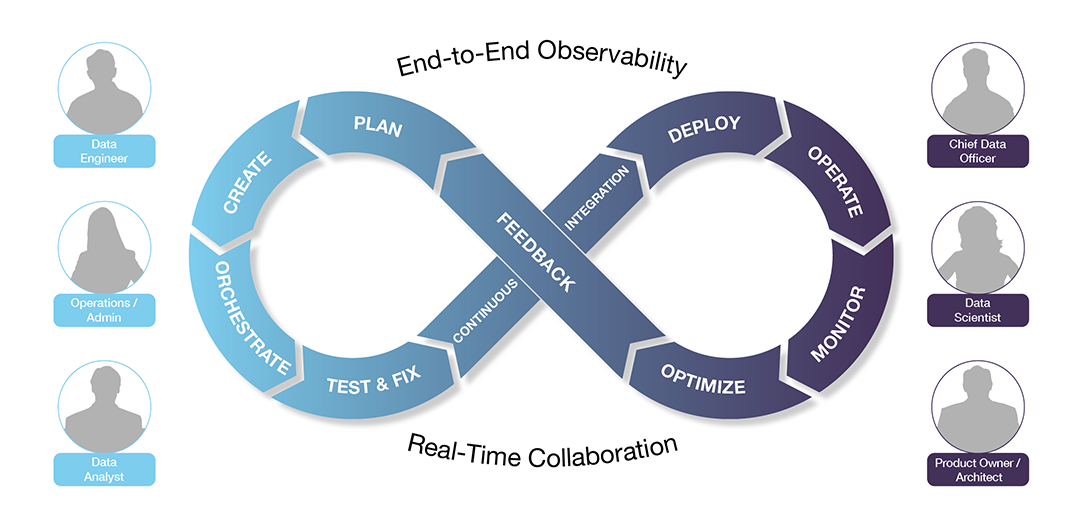

The DataOps lifecycle shown above takes data teams on a journey from raw data to insights. Where possible, DataOps stages are automated to accelerate time to value. The steps below show the full lifecycle of a data-driven application.

- Plan. Define how a business problem can be solved using data analytics. Identify the needed sources of data and the processing and analytics steps that will be required to solve the problem. Then select the right technologies, along with the delivery platform, and specify available budget and performance requirements.

- Create. Create the data pipelines and application code that will ingest, transform, and analyze the data. Based on the desired outcome, data applications are written using SQL, Scala, Python, R, or Java, among others.

- Orchestrate. Connect stages needed to work together to produce the desired result. Schedule code execution, with reference to when the results are needed; when cost-effective processing is most available; and when related jobs (inputs and outputs, or steps in a pipeline) are running.

- Test & Fix. Simulate the process of running the code against the data sources in a sandbox environment. Identify and remove any bottlenecks in data pipelines. Verify results for correctness, quality, performance, and efficiency.

- Continuous Integration. Verify that the revised code meets established criteria to be promoted into production. Integrate the latest, tested and verified code and data sources incrementally, to speed improvements and reduce risk.

- Deploy. Select the best scheduling window for job execution based on SLAs and budget. Verify that the changes are an improvement; if not, roll them back, and revise.

- Operate. Code runs against data, solving the business problem, and stakeholder feedback is solicited. Detect and fix deviations in performance to ensure that SLAs are met.

- Monitor. Observe the full stack, including data pipelines and code execution, end-to-end. Data operators and engineers use tools to observe the progress of code running against data in a busy environment, solving problems as they arise.

- Optimize. Constantly improve the performance, quality, cost, and business outcomes of data applications and pipelines. Team members work together to optimize the application’s resource usage and improve its performance and effectiveness.

- Feedback. The team gathers feedback from all stakeholders – the data team itself, app users, and line of business owners. The team compares results to business success criteria and delivers input to the Plan phase.

There are two overarching characteristics of DataOps that apply to every stage in the DataOps lifecycle: end-to-end observability and real-time collaboration.

End-to-End Observability

End-to-end observability is key to delivering high-quality data products, on time and under budget. You need to be able to measure key KPIs about your data-driven applications, the data sets they process, and the resources they consume. Key metrics include application / pipeline latency, SLA score, error rate, result correctness, cost of run, resource usage, data quality, and data usage.

You need this visibility horizontally – across every stage and service of the data pipeline – and vertically, to see whether it is the application code, service, container, data set, infrastructure or another layer that is experiencing problems. End-to-end observability provides a single, trusted “source of truth” for data teams and data product users to collaborate around.

Real-Time Collaboration

Real-time collaboration is crucial to agile techniques; dividing work into short sprints, for instance, provides a work rhythm across teams. The DataOps lifecycle helps teams identify where in the loop they’re working, and to reach out to other stages as needed to solve problems – both in the moment, and for the long term.

Real-time collaboration requires open discussion of results as they occur. The observability platform provides a single source of truth that grounds every discussion in shared facts. Only through real-time collaboration can a relatively small team have an outsized impact on the daily and long-term delivery of high-quality data products.

Conclusion

Through the use of a DataOps approach to their work, and careful attention to each step in the DataOps lifecycle, data teams can improve their productivity and the quality of the results they deliver to the organization.

As the ability to deliver predictable and reliable business value from data assets increases, the business as a whole will be able to make more and better use of data in decision-making, product development, and service delivery.

Advanced technologies, such as artificial intelligence and machine learning, can be implemented faster and with better results, leading to competitive differentiation and, in many cases, industry leadership.