Unravel’s purpose-built AI for Databricks unlocks the performance and efficiency data teams need to achieve speed and scale for data analytics and AI products. Unravel’s deep observability at the job, cluster, user, and code level enables AI-powered insights that help teams launch data applications faster, scale efficiently, forecast lakehouse capacity more accurately, achieve SLAs, and more. Unravel helps Databricks users speed up lakehouse adoption by providing instant visibility into costs, accurate spending predictions, and performance recommendations to accelerate their data pipelines and applications.


Unravel’s AI-powered Insights Engine comprehends the depth and breadth of the Databricks ecosystem, helping you achieve speed and scale on the lakehouse.

CLOUD COST MANAGEMENT & FINOPS SELF-GUIDED TOURS

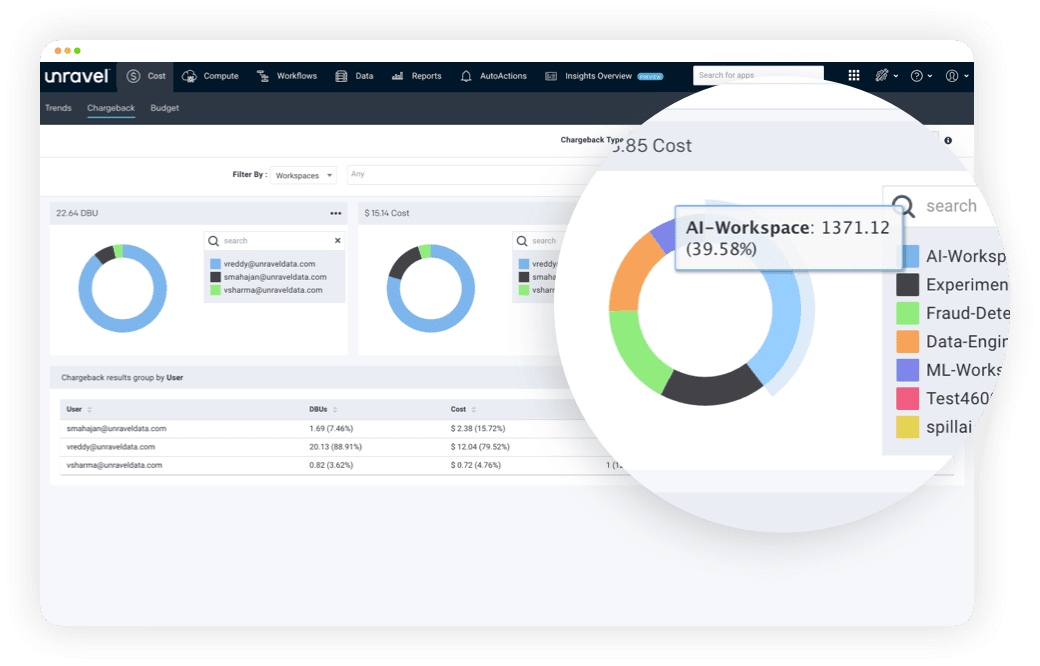

Assign cost with business context. Track overages. Get AI-driven cost-saving recommendations.

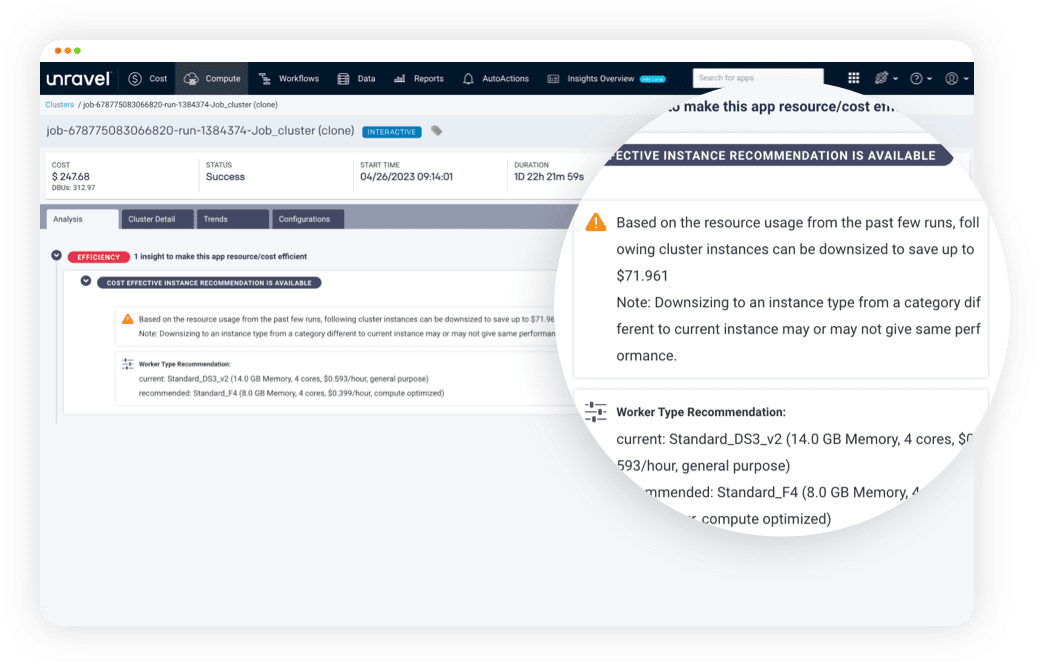

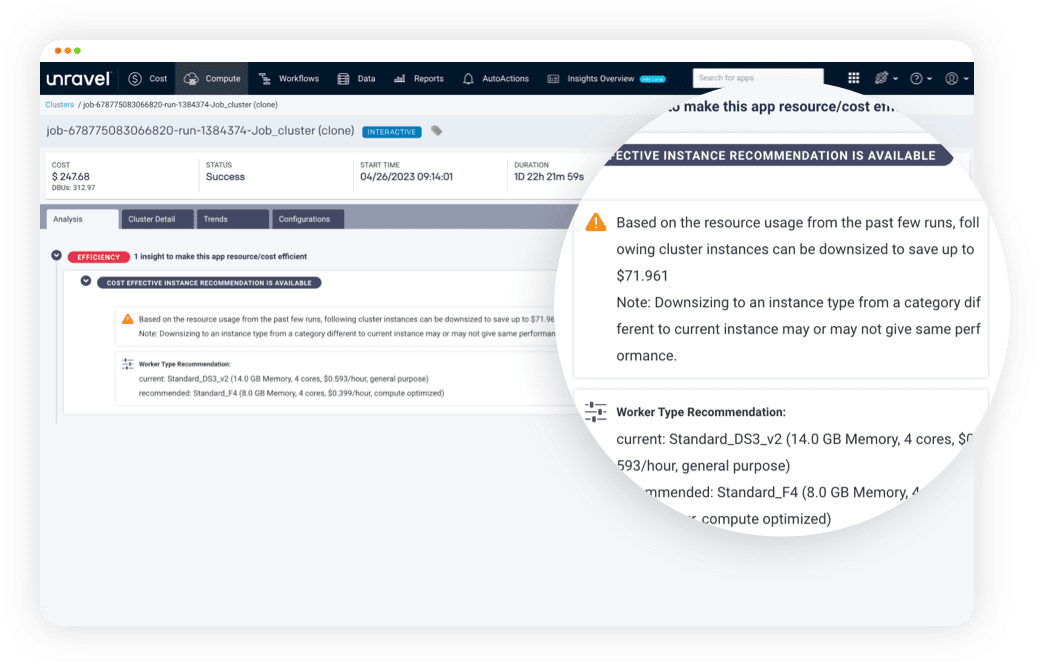

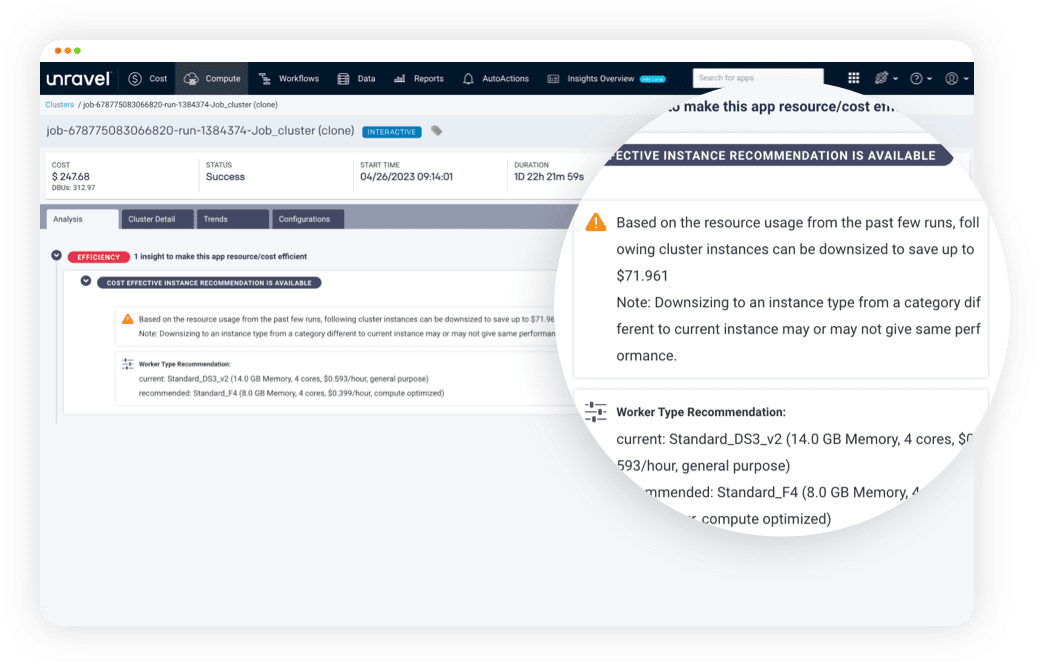

Quickly reduce spending at the cluster level with AI-driven cost-saving recommendations.

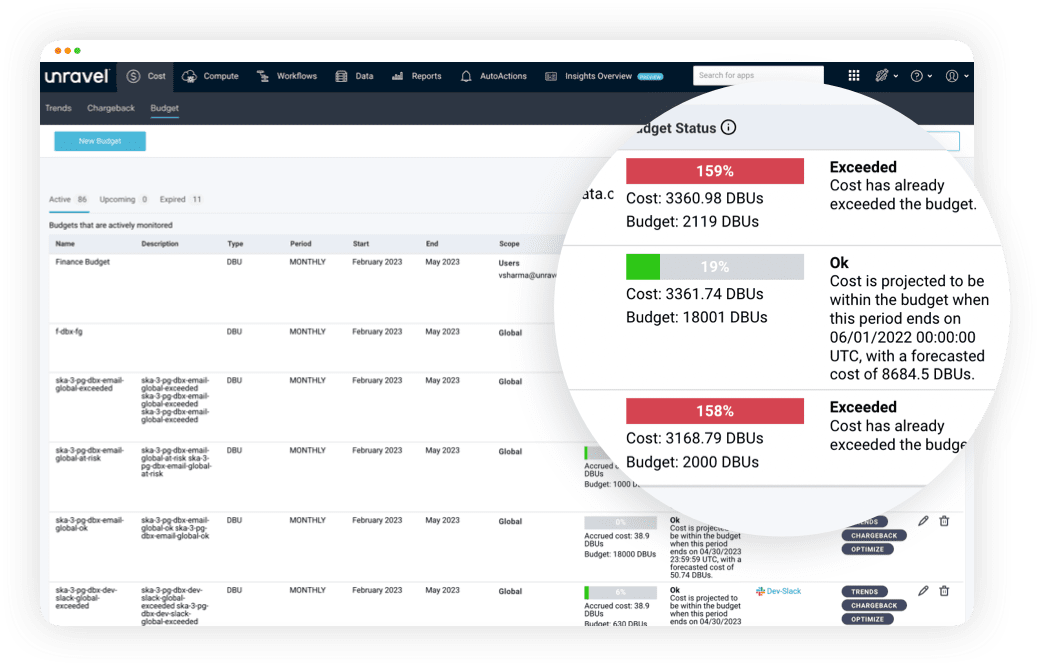

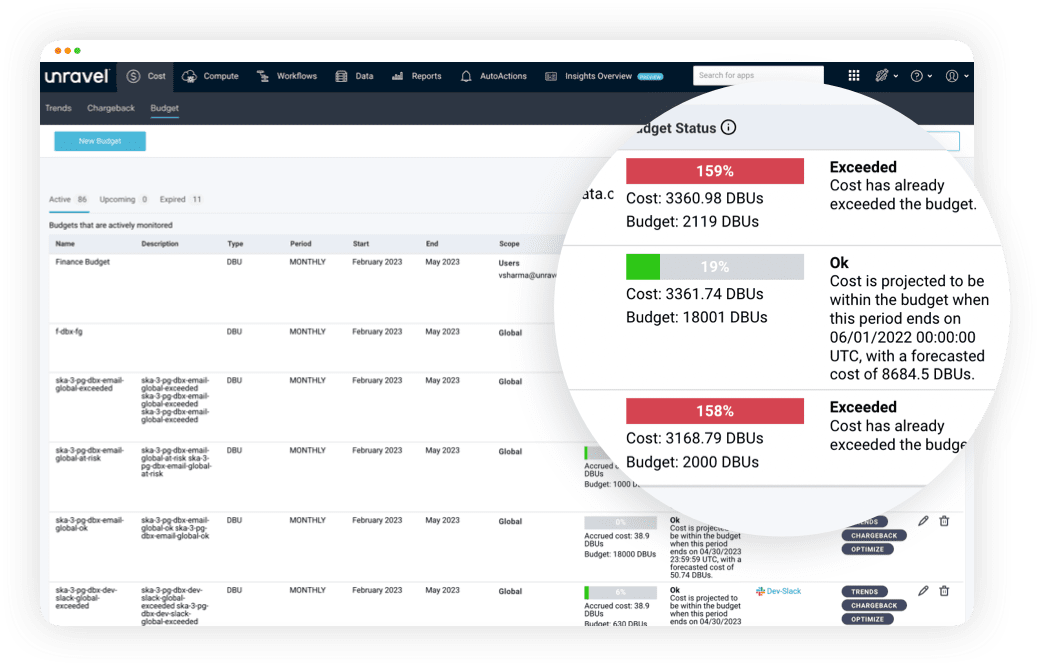

Track budgets vs. actual spending at a granular level and optimize costs with AI-driven recommendations.

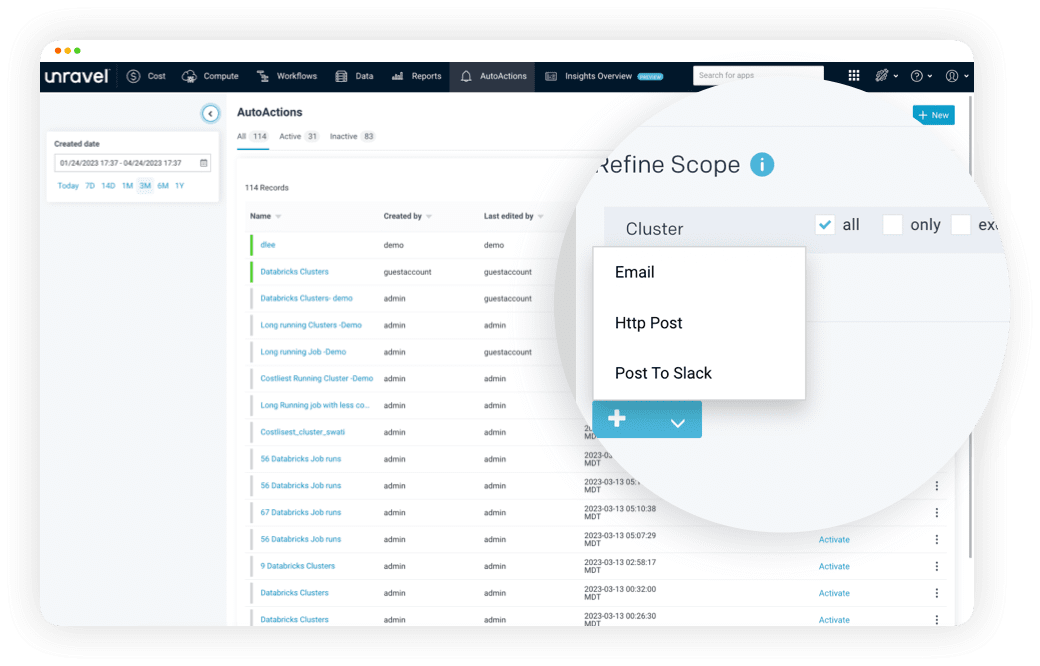

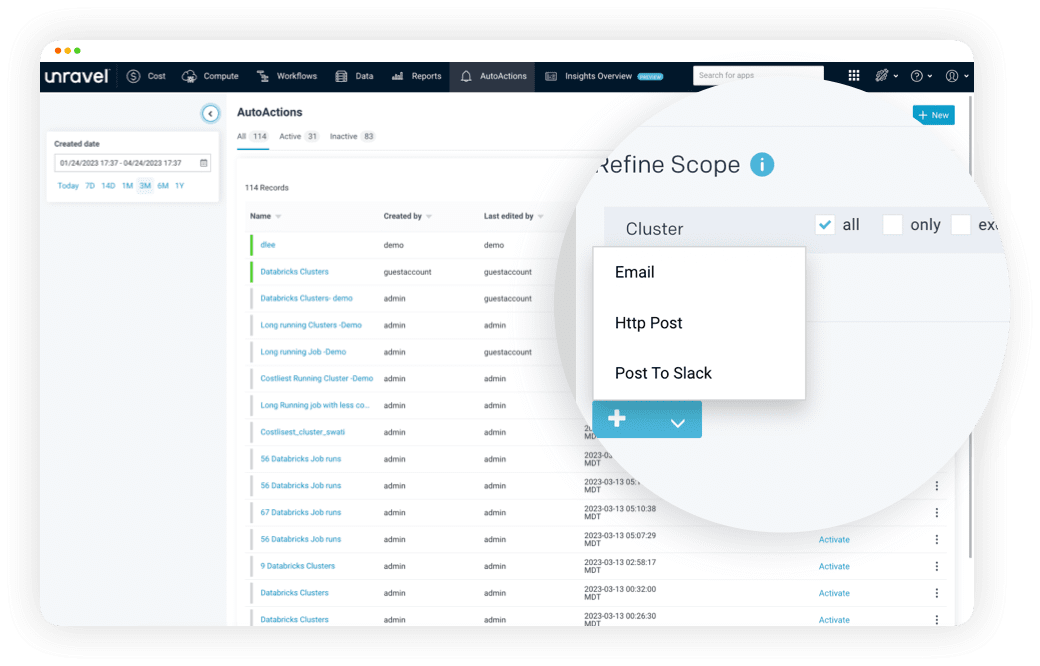

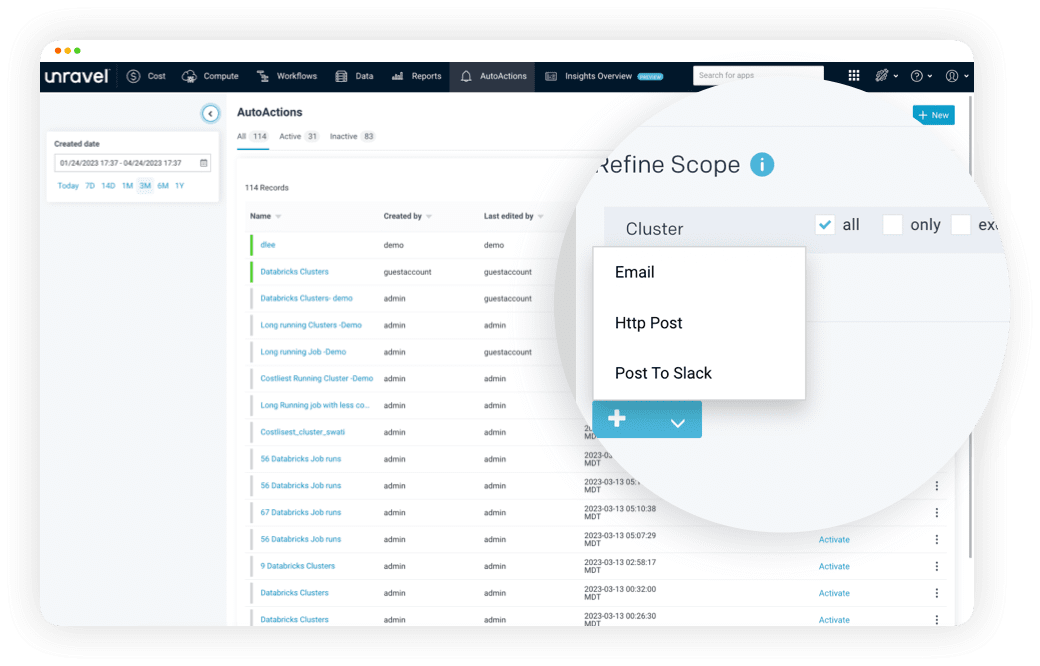

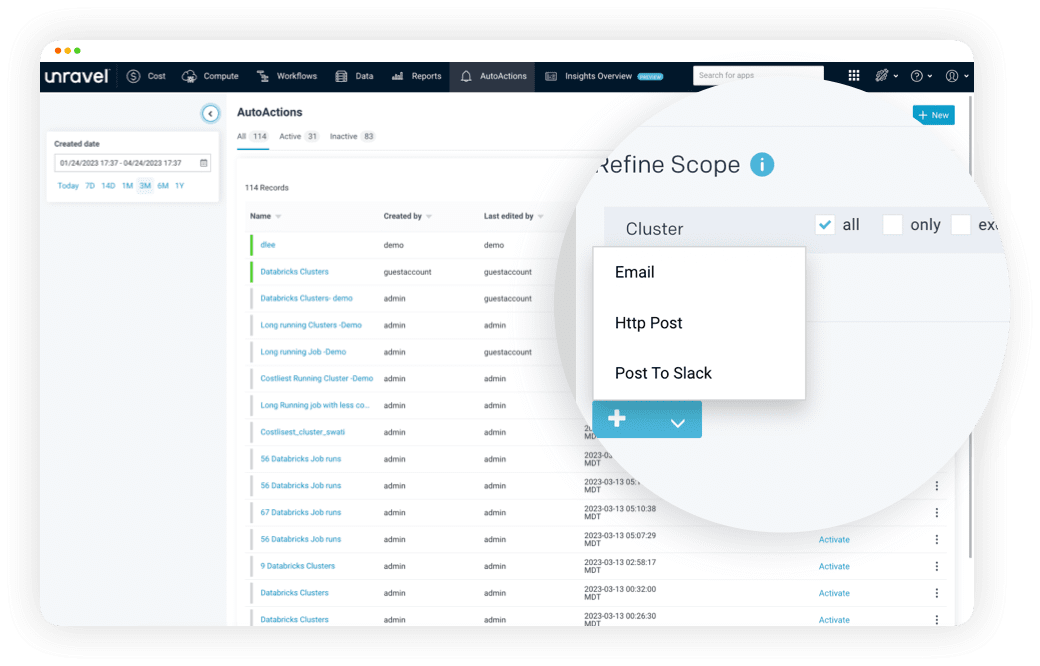

Create guardrails and keep budgets on track with automated oversight and real-time notifications.

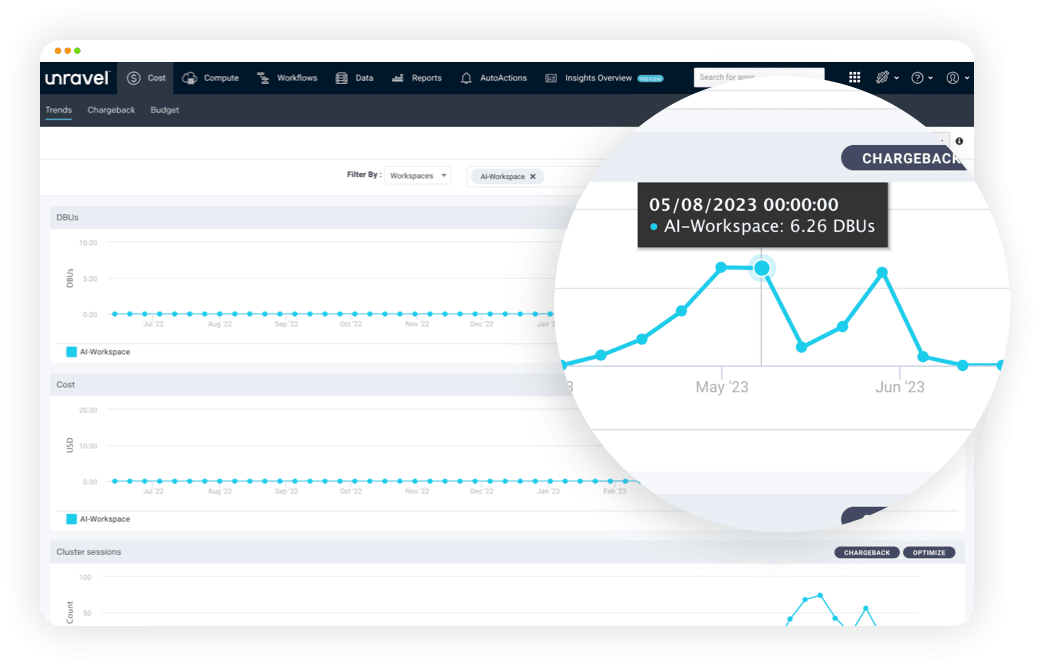

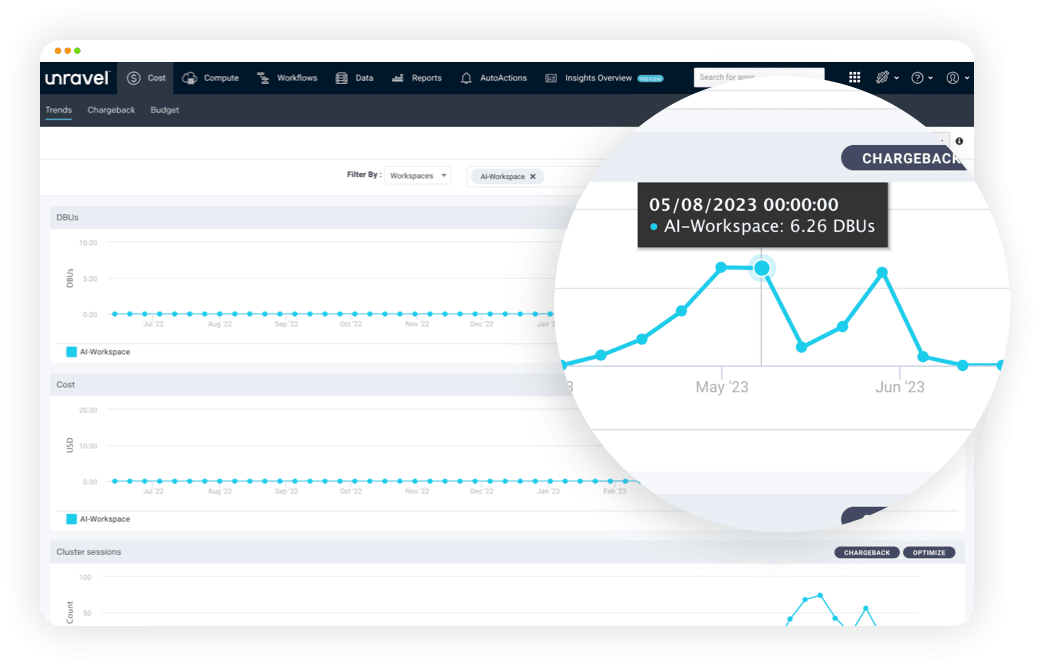

Accurately forecast cloud data spending with a data-driven view of consumption trends.


OPERATIONS & TROUBLESHOOTING SELF-GUIDED TOURS

Quickly reduce spending at the cluster level with AI-driven cost-saving recommendations.

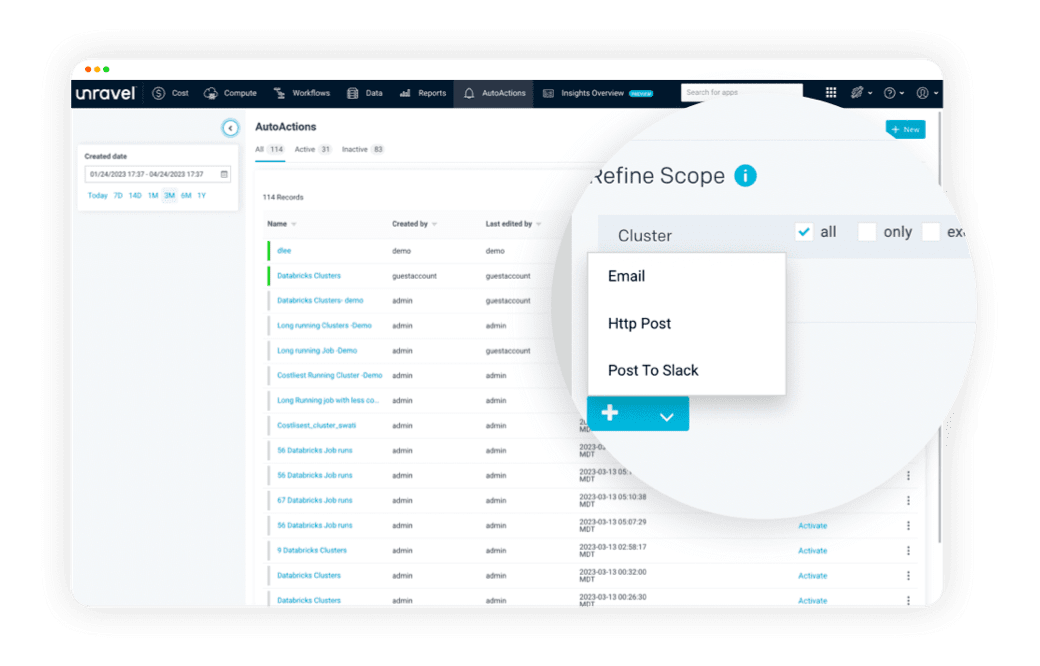

Automate operations by creating policies, alerts and actions.


PIPELINE & APP OPTIMIZATION SELF-GUIDED TOURS

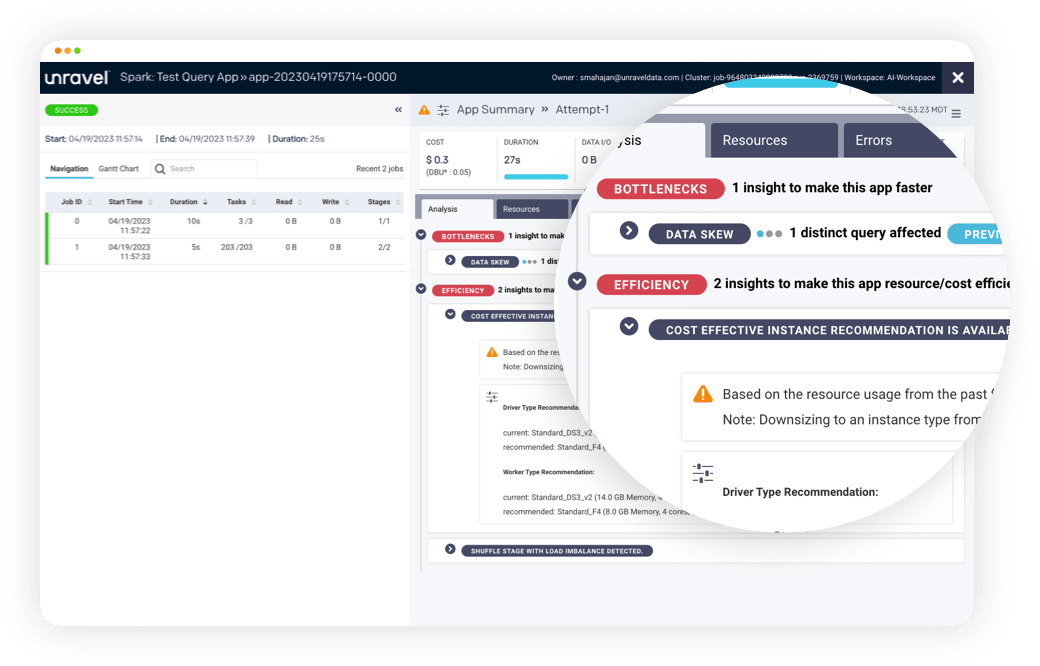

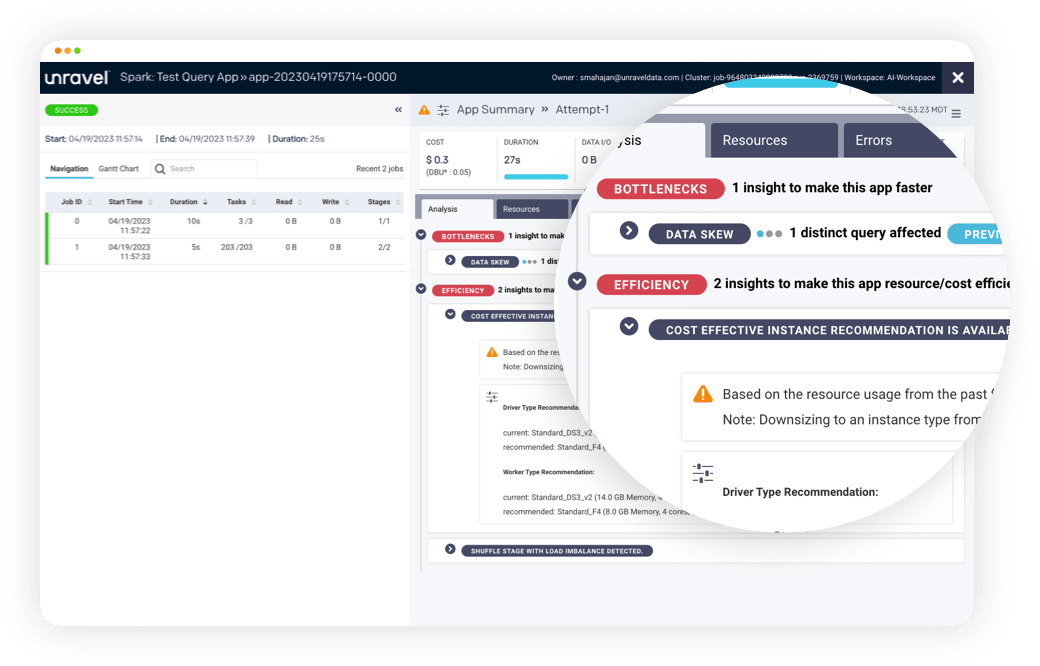

Quickly tune application performance and/or reduce costs with AI recommendations.

Quickly pinpoint where pipelines have bottlenecks and why they're missing SLAs.

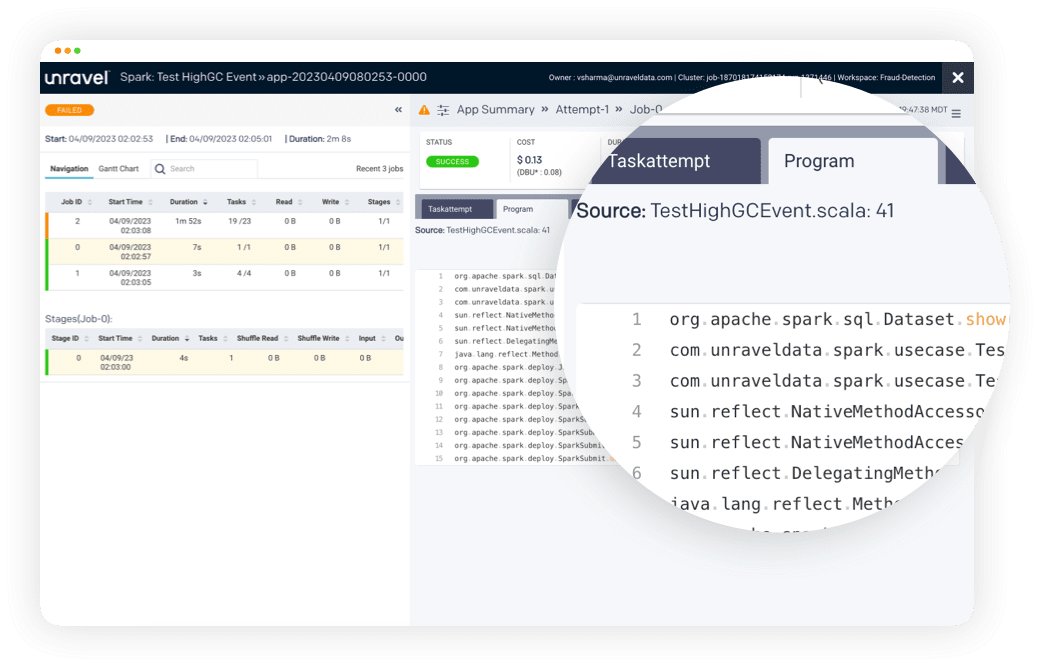

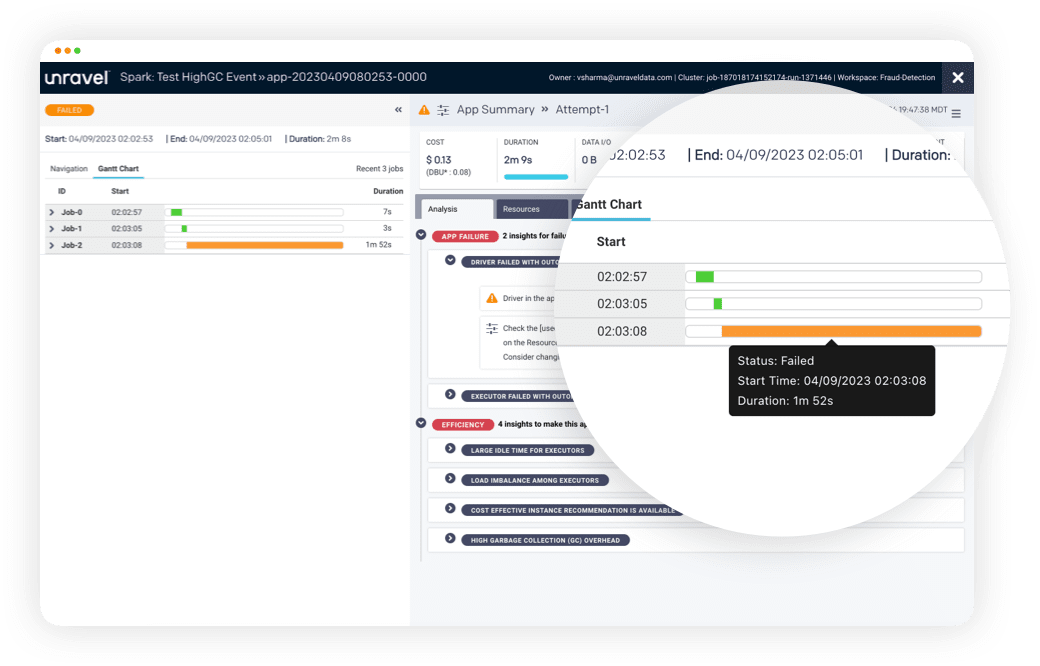

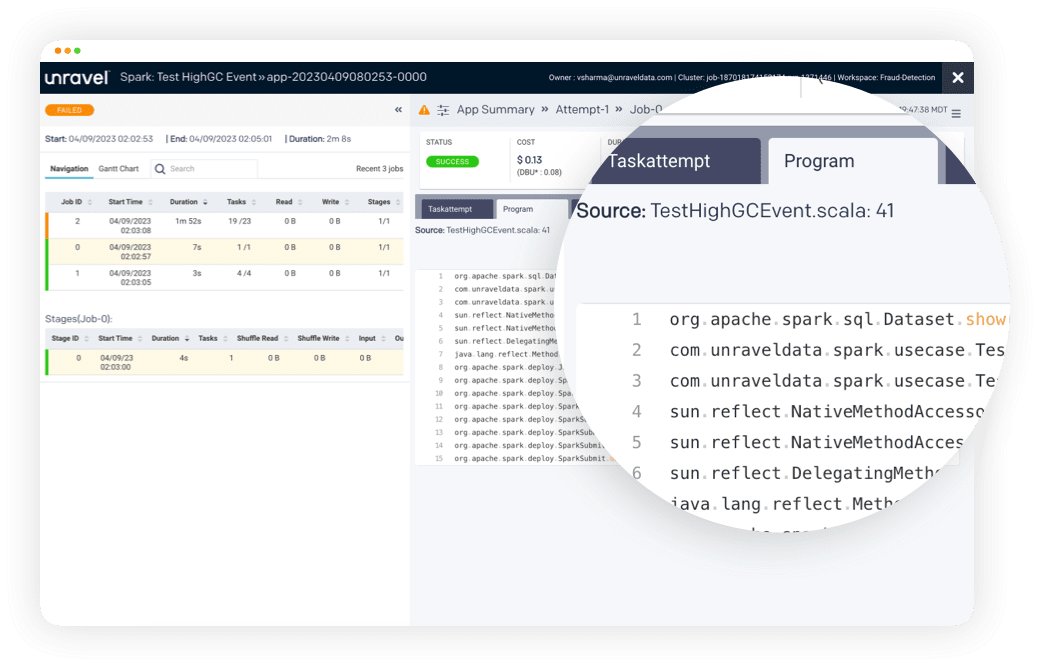

Quickly pinpoint exactly where, and why, application code is inefficient or failing in one easy-to-read view.

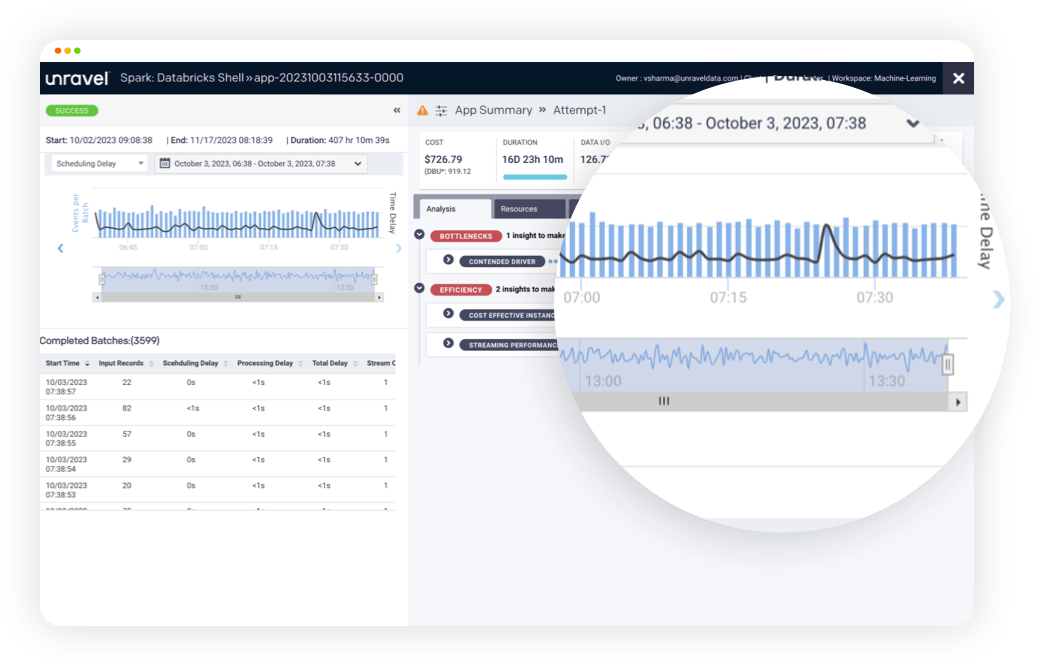

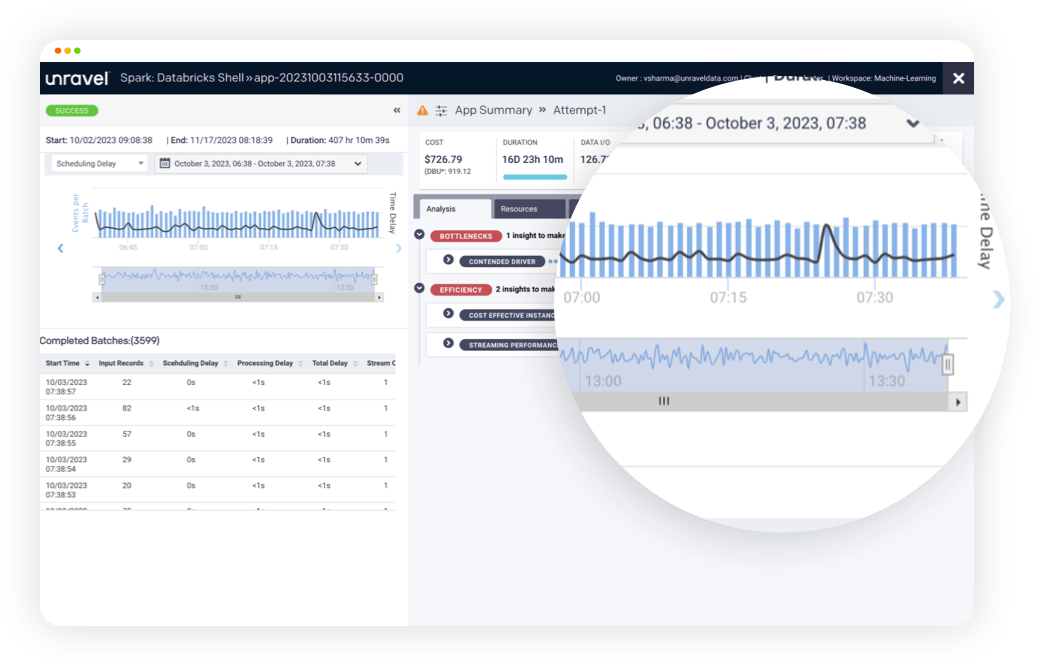

Keep your machine learning apps running smoothly using Unravel's AI-enabled observability.


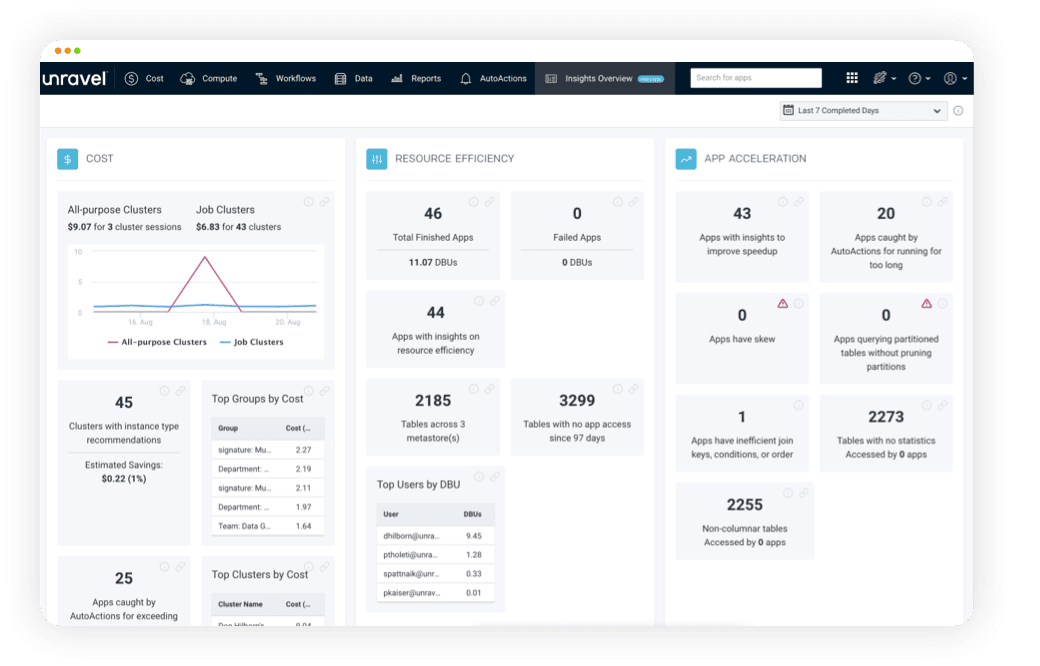

Unravel for Databricks provides a single source of truth that improves collaboration across functional teams and accelerates workflows for common use cases. Below are a few examples of how Unravel helps Databricks users in specific situations:

Scenario: Understand what we pay for Databricks, down to the user and app level, in real time, and forecast future spend accurately and with confidence.

Benefits: Granular visibility at the workspace, cluster, job, and user level enables FinOps practitioners to allocate costs, estimate annual cloud data application costs, identify cost drivers, and perform break-even and ROI analysis.

Scenario: Identify the most impactful recommendations to optimize overall cost and performance.

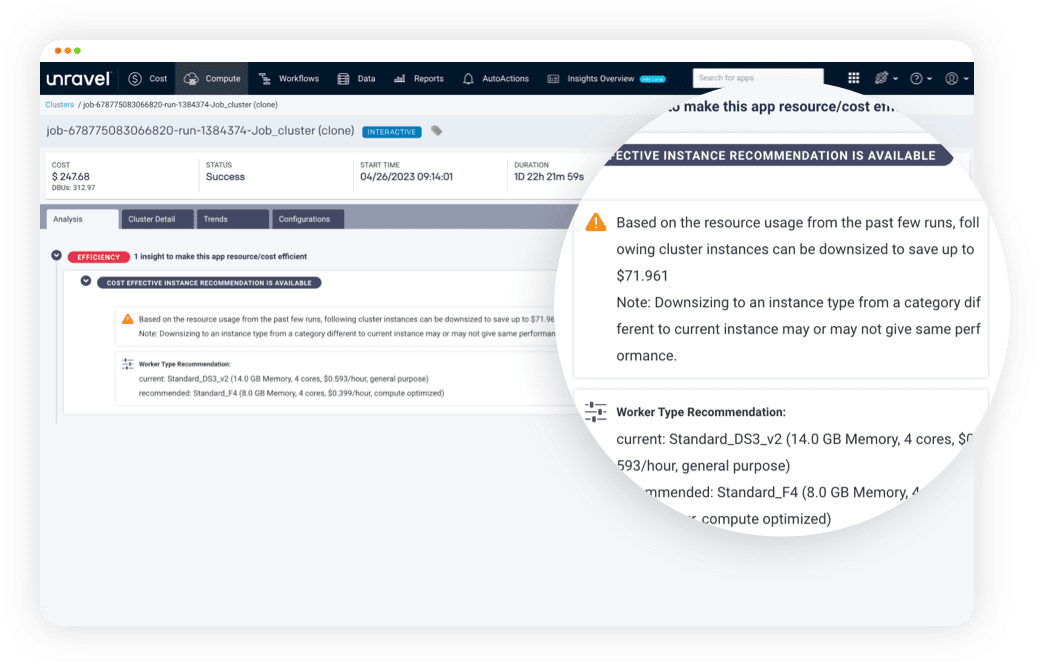

Benefits: AI-powered performance and cost optimization recommendations enable FinOps and data teams to rapidly upskill team members, implement cost efficiency SLAs, and optimize Databricks DBU and cloud infrastructure usage to maximize the company’s cloud data ROI.

Scenario: Identify the most impactful recommendations to optimize the cost and performance of a Databricks job.

Benefits: AI-driven insights and recommendations enable product and data teams to improve DBU and infrastructure usage, boost the performance of Python, Java, and Scala code and SQL queries, use partitioning to meet cost efficiency SLAs, and launch more data jobs within the same project budget.

Scenario: Live monitoring with alerts.

Benefits: Live monitoring with alerts reduces MTTR and helps prevent outages before they happen.

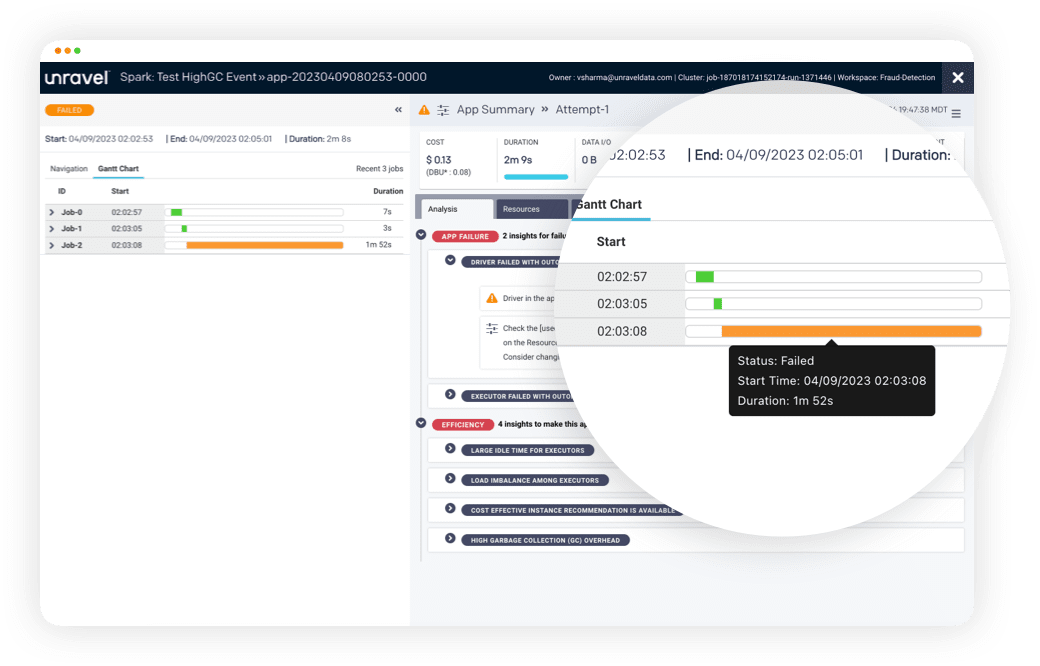

Scenario: Debugging a job and comparing jobs.

Benefits: Automatic troubleshooting guides data teams directly to the source of a job failure, down to the line of code or SQL query, along with AI recommendations to fix it and prevent future issues.

Scenario: Identify expensive, inefficient, or failed jobs.

Benefits: Proactively improve cost efficiency, performance, and reliability before deploying jobs into production.

No. Databricks Units (DBUs) are reference units of Databricks Lakehouse Platform capacity used to price and compare data workloads. DBU consumption depends on the underlying compute resources and the data volume processed. Cloud resources such as compute instances and cloud storage are priced separately. Databricks pricing is available for Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). You can estimate costs online for Databricks on AWS, Azure Databricks, and Databricks on Google Cloud, then add estimated cloud compute and storage costs with the AWS Pricing Calculator, the Azure pricing calculator, and the Google Cloud pricing calculator.
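To illustrate why DBU charges and cloud charges have to be added together, here is a minimal sketch that estimates one job run's cost as node-hours times a DBU rate times a per-DBU price, plus node-hours times a VM price. It is a simplification that assumes a fixed-size cluster and ignores data-volume effects; the `JobRun` fields, the function name, and every rate are hypothetical placeholders, not real Databricks or cloud prices.

```python
from dataclasses import dataclass


@dataclass
class JobRun:
    """Hypothetical description of one job run on a fixed-size cluster."""
    nodes: int                # driver + workers
    hours: float              # wall-clock runtime
    dbu_per_node_hour: float  # DBU rate of the instance type (assumed)
    dbu_price: float          # $ per DBU for the workload tier (assumed)
    vm_price_per_hour: float  # cloud provider price per VM-hour (assumed)


def estimate_run_cost(run: JobRun) -> dict:
    """Split a run's cost into the Databricks (DBU) part and the cloud (VM) part."""
    node_hours = run.nodes * run.hours
    dbus = node_hours * run.dbu_per_node_hour
    dbu_cost = dbus * run.dbu_price          # billed by Databricks
    vm_cost = node_hours * run.vm_price_per_hour  # billed separately by the cloud provider
    return {
        "dbus": dbus,
        "dbu_cost": round(dbu_cost, 2),
        "vm_cost": round(vm_cost, 2),
        "total": round(dbu_cost + vm_cost, 2),
    }


if __name__ == "__main__":
    # Placeholder numbers only.
    run = JobRun(nodes=5, hours=2.0, dbu_per_node_hour=1.0,
                 dbu_price=0.15, vm_price_per_hour=0.40)
    print(estimate_run_cost(run))
```

Keeping the two parts separate mirrors how they are billed; for real rates, use the Databricks pricing pages and the cloud provider's calculator.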

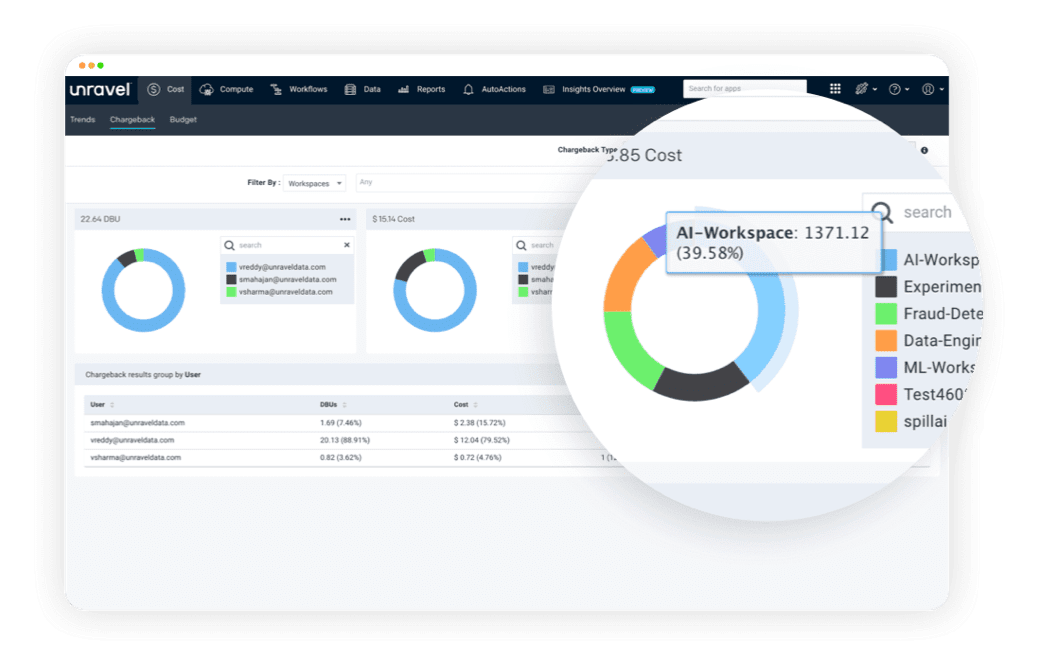

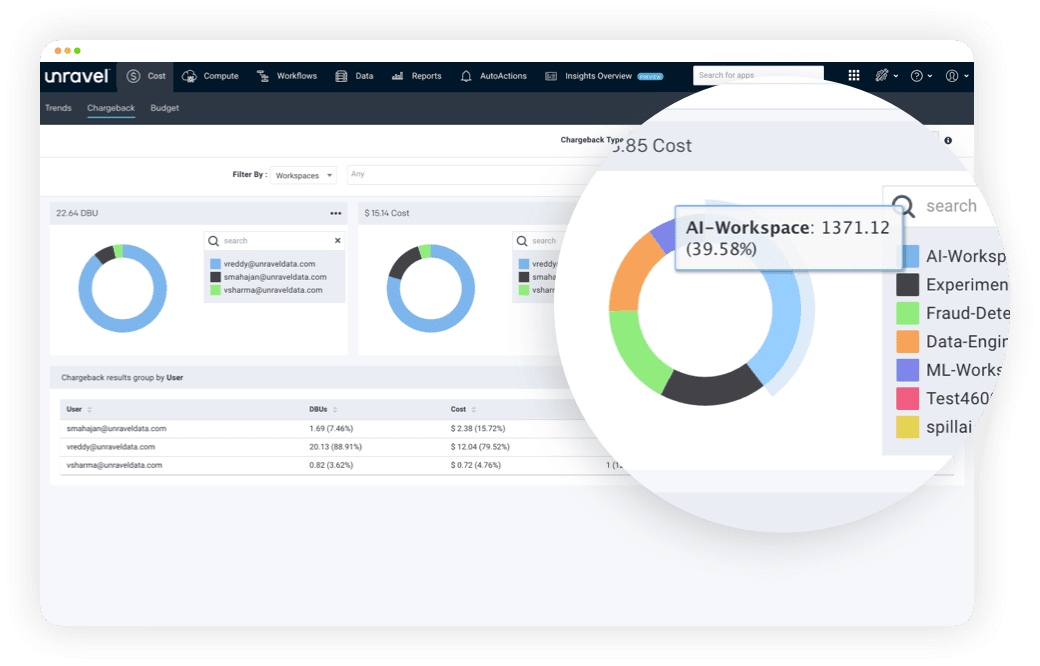

Cost 360 for Databricks provides cost trends and chargeback by app, user, department, project, business unit, queue, cluster, or instance. On the Databricks Cost Chargeback details tab, you can see a real-time cost breakdown for Databricks clusters, including related services such as DBUs and VMs, for each configured Databricks account. You also get a holistic view of each cluster, including resource utilization, chargeback, and instance health, along with automated AI-based cluster cost-saving recommendations.
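At its core, chargeback attributes each cost record to a business dimension and sums per group. The sketch below does that for a few made-up records; the record fields (`user`, `department`, `cluster`, `cost`) and the function name are hypothetical and do not reflect Cost 360's actual data model.

```python
from collections import defaultdict
from typing import Iterable, Mapping


def chargeback(records: Iterable[Mapping[str, object]], dimension: str) -> dict:
    """Sum the 'cost' field of each record, grouped by the given dimension.

    Records missing the dimension are grouped under 'unallocated' so that
    untagged spend stays visible instead of silently disappearing.
    """
    totals = defaultdict(float)
    for rec in records:
        key = str(rec.get(dimension, "unallocated"))
        totals[key] += float(rec["cost"])
    # Round for display and list the largest cost first.
    rounded = ((k, round(v, 2)) for k, v in totals.items())
    return dict(sorted(rounded, key=lambda kv: kv[1], reverse=True))


if __name__ == "__main__":
    # Made-up per-run cost records (DBU + VM spend already combined).
    runs = [
        {"user": "ana", "department": "marketing", "cluster": "etl-1", "cost": 12.5},
        {"user": "bo", "department": "finance", "cluster": "etl-1", "cost": 7.25},
        {"user": "ana", "department": "marketing", "cluster": "ml-2", "cost": 30.0},
        {"user": "cy", "cluster": "adhoc", "cost": 4.0},  # no department tag
    ]
    print(chargeback(runs, "department"))
    print(chargeback(runs, "user"))
```

The same records can be regrouped by any dimension, which is why tagging and metadata quality matter more than the aggregation itself.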

Databricks offers general tips and settings for certain scenarios, for example, auto optimize to compact small files. Unravel provides recommendations, efficiency insights, and tuning suggestions on the Applications page and the Jobs tab. With a single Unravel instance, you can monitor all your clusters across all instances and workspaces in Databricks to speed up your applications, improve resource utilization, and identify and resolve application problems.
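Auto optimize is enabled per Delta table through table properties. The helper below only builds the SQL statement; it assumes the `delta.autoOptimize.optimizeWrite` and `delta.autoOptimize.autoCompact` properties described in the Databricks documentation (verify them for your runtime version), and the function and table names are hypothetical placeholders.

```python
import re

# Delta table properties for optimized writes and auto compaction
# (names as documented for Databricks; check your runtime version).
AUTO_OPTIMIZE_PROPS = {
    "delta.autoOptimize.optimizeWrite": "true",
    "delta.autoOptimize.autoCompact": "true",
}

# Accept only plain identifiers like table, schema.table, or catalog.schema.table.
_TABLE_NAME = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*){0,2}$")


def auto_optimize_sql(table: str) -> str:
    """Return an ALTER TABLE statement that turns on auto optimize for a Delta table."""
    if not _TABLE_NAME.match(table):
        raise ValueError(f"unexpected table name: {table!r}")
    props = ", ".join(f"'{k}' = '{v}'" for k, v in AUTO_OPTIMIZE_PROPS.items())
    return f"ALTER TABLE {table} SET TBLPROPERTIES ({props})"


if __name__ == "__main__":
    stmt = auto_optimize_sql("sales.events")  # placeholder table name
    print(stmt)
    # In a Databricks notebook you would run: spark.sql(stmt)
```

Validating the table name before interpolating it keeps the helper from building arbitrary SQL out of untrusted input.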

Real-time monitoring and alerting with Databricks Overwatch requires a time-series database. Databricks refreshes your billable usage data about every 24 hours, and AWS Cost and Usage Reports are updated once a day in comma-separated values (CSV) format. Because Cost Explorer also includes the usage and costs of other services, you should tag your Databricks resources; consider creating custom tags to get granular reporting on your Databricks cluster resource usage. Unravel simplifies this process with Cost 360 for Databricks, which provides full cost observability, budgeting, forecasting, and optimization in near real time. Cost 360 includes granular details about the user, team, data workload, usage type, data job, data application, compute, and resources consumed to execute each data application. Cost 360 also provides insights and recommendations to optimize clusters and jobs, as well as estimated cost improvements to help prioritize workload optimization.
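To show what tagging buys you, the sketch below reads a tiny CSV shaped like an AWS Cost and Usage Report and sums unblended cost per team, keeping only Databricks-tagged resources. It assumes the `Vendor` tag that Databricks applies to cluster resources and a hypothetical custom `Team` tag (for example set through the cluster's custom tags), both activated as cost allocation tags so they appear as `resourceTags/user:...` columns. Check the exact column names in your own report; the function name is hypothetical.

```python
import csv
import io
from collections import defaultdict

# Column names assume cost allocation tags "Vendor" and "Team" are activated.
COST_COL = "lineItem/UnblendedCost"
VENDOR_COL = "resourceTags/user:Vendor"
TEAM_COL = "resourceTags/user:Team"  # hypothetical custom tag set on clusters


def databricks_cost_by_team(cur_csv: str) -> dict:
    """Sum unblended cost per Team tag, keeping only rows tagged Vendor=Databricks."""
    totals = defaultdict(float)
    for row in csv.DictReader(io.StringIO(cur_csv)):
        if row.get(VENDOR_COL) != "Databricks":
            continue  # other AWS services appear in the same report
        team = row.get(TEAM_COL) or "untagged"
        totals[team] += float(row[COST_COL] or 0)
    return {team: round(cost, 2) for team, cost in totals.items()}


# Made-up report rows; the 9.99 row is a non-Databricks service.
SAMPLE = """lineItem/UnblendedCost,resourceTags/user:Vendor,resourceTags/user:Team
1.20,Databricks,data-eng
0.80,Databricks,data-eng
2.50,Databricks,ml
9.99,,
0.30,Databricks,
"""

if __name__ == "__main__":
    print(databricks_cost_by_team(SAMPLE))
```

Note that this covers only the cloud-side charges in the report; DBU charges come from Databricks billable usage data and have to be joined separately.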

In the FinOps Crawl phase, cost data is typically less granular; you may start at the cloud provider and service level. In the Walk phase, you’ll want additional granularity down to the application level. If FinOps data is only available at the cluster or infrastructure level, you may face challenges with cost allocation. In the Run phase, you may need to get down to the user, project, and job level.
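When cost data stops at the cluster level, one common workaround is to split each shared cluster's bill across the jobs that ran on it in proportion to a usage measure. The sketch below uses core-hours as that allocation key; the key, the function name, the job names, and the figures are illustrative assumptions, and real allocation policies vary.

```python
def allocate_shared_cost(cluster_cost: float, usage: dict) -> dict:
    """Split a shared cluster's cost across jobs proportionally to their usage.

    `usage` maps job name -> usage measure (for example core-hours).
    Rounding to cents can leave a residue of about a cent versus cluster_cost.
    """
    total_usage = sum(usage.values())
    if total_usage <= 0:
        raise ValueError("usage must have a positive total")
    return {job: round(cluster_cost * u / total_usage, 2) for job, u in usage.items()}


if __name__ == "__main__":
    # A $120 cluster bill shared by three jobs (made-up core-hours).
    print(allocate_shared_cost(120.0, {"ingest": 30.0, "transform": 60.0, "report": 10.0}))
```

With a proportional key, idle cluster time is spread implicitly across the jobs; some teams prefer to report idle cost as its own line so it stays visible.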

Data teams spend most of their time preparing data (aggregating, cleansing, deduplicating, synchronizing, and standardizing it, and ensuring its quality, timeliness, and accuracy) rather than actually delivering insights from analytics. Everybody needs to work from a “single source of truth” to break down silos, enable collaboration, eliminate finger-pointing, and empower more self-service. Although the goal is to prevent data quality issues, assessing and improving data quality typically begins with monitoring and observability: detecting anomalies and analyzing their root causes.
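Anomaly detection for data quality can start very simply. The sketch below flags days whose row count deviates from the trailing window's mean by more than a chosen number of standard deviations, a plain z-score rule. The window size, threshold, function name, and row counts are illustrative assumptions; this is a generic technique, not Unravel's detection method.

```python
from statistics import mean, stdev


def flag_anomalies(values: list, window: int = 7, threshold: float = 3.0) -> list:
    """Return indexes of values more than `threshold` std devs from the trailing mean.

    Each point is compared only with the `window` points before it, so the first
    `window` points are never flagged.
    """
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change at all as unusual.
            if values[i] != mu:
                flagged.append(i)
        elif abs(values[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # Daily row counts for a table; day 9 looks like a partial load.
    rows = [1000, 1020, 990, 1010, 1005, 995, 1015, 1008, 1002, 310, 1001]
    print(flag_anomalies(rows))
```

A flagged day is only the starting point; root cause analysis then asks which upstream job, source, or configuration change produced the drop.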

Databricks collects monitoring and operational data in the form of logs, metrics, and events for your Databricks job flows. Databricks metrics can be used to detect basic conditions such as idle clusters and nodes or clusters that run out of storage. Troubleshooting slow clusters and failed jobs involves a number of steps such as gathering data and digging into log files. Data application performance tuning, root cause analysis, usage forecasting, and data quality checks require additional tools and data sources. Unravel accelerates the troubleshooting process by creating a data model using metadata from your applications, clusters, resources, users, and configuration settings, then applying predictive analytics and machine learning to provide recommendations and automatically tune your Databricks clusters.
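As an example of a basic condition that cluster metrics can surface, the sketch below flags clusters whose CPU utilization stayed under a threshold for every sample in a recent window. The metric samples, the 5% threshold, the window length, and the function name are hypothetical; in practice the samples would come from whatever metrics source you use.

```python
from typing import Mapping, Sequence


def idle_clusters(
    cpu_samples: Mapping[str, Sequence[float]],
    threshold_pct: float = 5.0,
    min_samples: int = 6,
) -> list:
    """Return cluster IDs whose last `min_samples` CPU readings are all below the threshold.

    Clusters with fewer than `min_samples` readings are skipped (not enough evidence).
    """
    if min_samples < 1:
        raise ValueError("min_samples must be at least 1")
    idle = []
    for cluster_id, samples in cpu_samples.items():
        recent = samples[-min_samples:]
        if len(recent) == min_samples and all(s < threshold_pct for s in recent):
            idle.append(cluster_id)
    return sorted(idle)


if __name__ == "__main__":
    # Hypothetical 10-minute CPU averages (%), oldest first.
    samples = {
        "etl-nightly": [85, 90, 70, 2, 1, 1, 0, 1, 2],
        "adhoc-analyst": [40, 3, 35, 2, 4, 50],
        "ml-training": [1, 1],
    }
    print(idle_clusters(samples))
```

Requiring a full window of low readings avoids flagging a cluster that is merely between stages of a job.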

Virtual Private Cloud (VPC) peering lets you create a network connection between Databricks clusters and your AWS resources, even across regions, so that you can route traffic between them using private IP addresses. For example, if you run both an Unravel EC2 instance and a Databricks cluster in the us-east-1 region but in different VPCs and subnets, there is no network access between the Unravel EC2 instance and the Databricks cluster by default. To enable network access, you can set up VPC peering to connect Databricks to your Unravel EC2 instance.
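Two preconditions are easy to miss when peering the Unravel VPC with the Databricks VPC: the two IPv4 CIDR ranges must not overlap, and each side needs a route (plus security group rules) pointing at the peer. The sketch below checks the first with Python's `ipaddress` module, then uses boto3 EC2 client calls to request and accept the peering and add a route on each side. It assumes both VPCs are in the same AWS account and region; the function names, VPC IDs, route table IDs, and CIDRs are placeholders, and security group changes are left out.

```python
import ipaddress


def cidrs_overlap(cidr_a: str, cidr_b: str) -> bool:
    """VPC peering is not possible between VPCs with overlapping IPv4 CIDR blocks."""
    return ipaddress.ip_network(cidr_a).overlaps(ipaddress.ip_network(cidr_b))


def peer_vpcs(region: str,
              unravel_vpc: str, unravel_cidr: str, unravel_rtb: str,
              databricks_vpc: str, databricks_cidr: str, databricks_rtb: str) -> str:
    """Peer two same-account, same-region VPCs and route traffic both ways.

    All IDs are placeholders; security group rules still need to be added.
    """
    if cidrs_overlap(unravel_cidr, databricks_cidr):
        raise ValueError("CIDR blocks overlap; VPC peering is not possible")
    import boto3  # lazy import so the CIDR check works without boto3 installed

    ec2 = boto3.client("ec2", region_name=region)
    resp = ec2.create_vpc_peering_connection(VpcId=unravel_vpc, PeerVpcId=databricks_vpc)
    pcx_id = resp["VpcPeeringConnection"]["VpcPeeringConnectionId"]
    ec2.get_waiter("vpc_peering_connection_exists").wait(VpcPeeringConnectionIds=[pcx_id])
    ec2.accept_vpc_peering_connection(VpcPeeringConnectionId=pcx_id)

    # Each side routes the other side's CIDR through the peering connection.
    ec2.create_route(RouteTableId=unravel_rtb, DestinationCidrBlock=databricks_cidr,
                     VpcPeeringConnectionId=pcx_id)
    ec2.create_route(RouteTableId=databricks_rtb, DestinationCidrBlock=unravel_cidr,
                     VpcPeeringConnectionId=pcx_id)
    return pcx_id


if __name__ == "__main__":
    print(cidrs_overlap("10.0.0.0/16", "10.1.0.0/16"))
```

Running the overlap check first is cheap and catches the most common reason a peering request cannot be used, before any AWS resources are created.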

Unravel provides granular insights, recommendations, and automation before, during, and after your migration of Spark and Hadoop workloads and data to Databricks.

Get granular chargeback and cost optimization for your Databricks workloads. Unravel for Databricks is a complete data observability platform to help you tune, troubleshoot, cost-optimize, and ensure data quality on Databricks. Unravel provides AI-powered recommendations and automated actions to enable intelligent optimization of big data pipelines and applications.

Many enterprises experience performance and cost issues when migrating on-premises workloads to Databricks. On-premises workloads are naturally limited in cost by the physical constraints of the data center, but costs can grow suddenly in the cloud. Enterprises that have successfully migrated to Databricks start by optimizing jobs before moving them to the cloud so they can predict spend and performance.

Azure Databricks offers optimization suggestions and troubleshooting tips for certain scenarios, for example, auto optimize to compact small files. Unravel provides recommendations, efficiency insights, and tuning suggestions on the Applications page and the Jobs tab. With a single Unravel instance, you can monitor all your clusters across all instances and workspaces in Azure Databricks to speed up your applications, improve resource utilization, and identify and resolve application problems.

Azure Databricks collects monitoring and operational data in the form of logs and metrics for your Azure Databricks job flows. Azure Databricks metrics can be used to detect basic conditions such as idle clusters and nodes or clusters that run out of storage. Troubleshooting slow clusters and failed jobs involves a number of steps such as gathering data and digging into log files. Data application performance tuning, root cause analysis, usage forecasting, and data quality checks require additional tools and data sources. Unravel accelerates the troubleshooting process by creating a data model using metadata from your applications, clusters, resources, users, and configuration settings, then applying predictive analytics and machine learning to provide recommendations and automatically tune your Azure Databricks clusters.

Virtual network (VNet) peering lets you create a network connection between Azure Databricks clusters and your Azure resources, even across regions, so that you can route traffic between them using private IP addresses. For example, if you run both an Unravel VM and an Azure Databricks cluster in the East US region but in different VNets and subnets, there is no network access between the Unravel VM and the Databricks cluster by default. To enable network access, you can set up VNet peering between the VNet that hosts your Azure Databricks cluster and the VNet that hosts your Unravel VM.
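Azure VNet peering is created as a pair of peering resources, one on each virtual network, and traffic flows only once both exist. The sketch below assumes the `azure-identity` and `azure-mgmt-network` Python packages, both VNets in the same subscription, and an Azure Databricks workspace deployed into your own VNet (VNet injection); with a Databricks-managed VNet, the workspace side of the peering is set up through the workspace's own peering settings instead. The helper names, subscription, resource groups, VNet names, and peering names are hypothetical placeholders.

```python
def vnet_id(subscription_id: str, resource_group: str, vnet_name: str) -> str:
    """Build the Azure Resource Manager ID of a virtual network."""
    return (f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
            f"/providers/Microsoft.Network/virtualNetworks/{vnet_name}")


def peering_params(remote_vnet_id: str) -> dict:
    """Body for one direction of a VNet peering: private traffic only, no gateway transit."""
    return {
        "remote_virtual_network": {"id": remote_vnet_id},
        "allow_virtual_network_access": True,
        "allow_forwarded_traffic": False,
        "allow_gateway_transit": False,
        "use_remote_gateways": False,
    }


def peer_vnets(subscription_id: str, rg_a: str, vnet_a: str, rg_b: str, vnet_b: str) -> None:
    """Create peerings A->B and B->A; both must exist before traffic can flow."""
    # Lazy imports so the pure helpers above work without the Azure SDK installed.
    from azure.identity import DefaultAzureCredential
    from azure.mgmt.network import NetworkManagementClient

    client = NetworkManagementClient(DefaultAzureCredential(), subscription_id)
    id_a = vnet_id(subscription_id, rg_a, vnet_a)
    id_b = vnet_id(subscription_id, rg_b, vnet_b)
    for rg, local_vnet, remote_id, name in (
        (rg_a, vnet_a, id_b, f"{vnet_a}-to-{vnet_b}"),
        (rg_b, vnet_b, id_a, f"{vnet_b}-to-{vnet_a}"),
    ):
        poller = client.virtual_network_peerings.begin_create_or_update(
            rg, local_vnet, name, peering_params(remote_id))
        poller.result()  # block until this direction is provisioned
```

As with AWS, the two address spaces must not overlap, and network security group rules still need to allow the Unravel traffic.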