Over the past year, we at Unravel Data have spoken to several enterprise customers (with cluster sizes ranging from tens to thousands of nodes) about the challenges they face with their modern data stack applications. Most of them run Hadoop in a typical multi-tenant fashion, in which different applications (ETL, Business Intelligence, Machine Learning apps, etc.) access a common data substrate.

The conversations have typically been with both the Operations team, who manage and maintain these platforms, and the Developers/End Users, who build applications on the modern data stack (Hive, Spark, MR, Oozie, etc.).

Broadly speaking, the challenges fall into two groups: those seen from the application perspective and those seen from the cluster perspective. We illustrate each with actual examples that came up in our discussions with these customers.

Challenges faced by Developers/Data Engineers

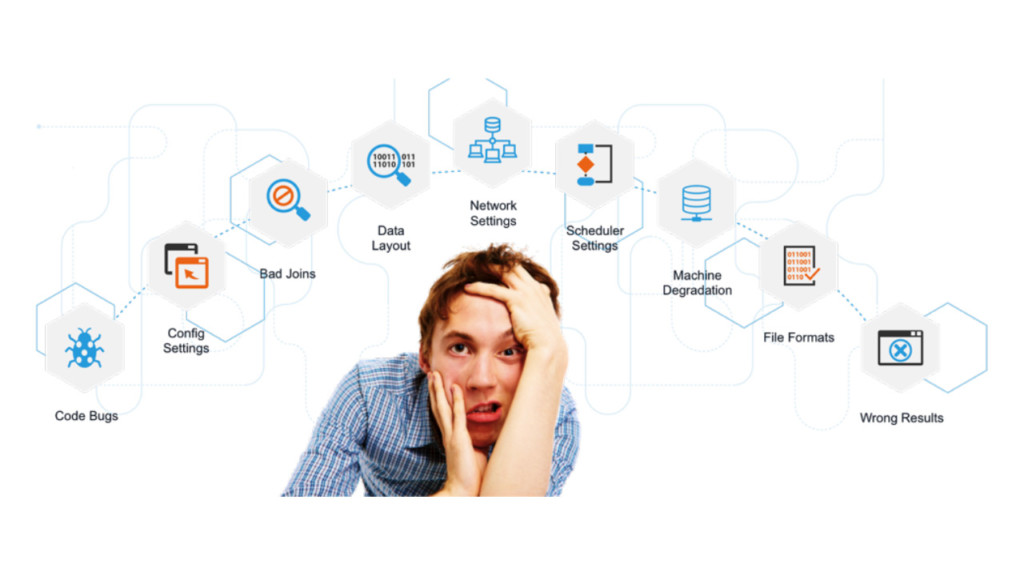

Some of the most common Application challenges we have seen or heard of that are top of mind include:

- An ad-hoc application (submitted via Hive, Spark, or MR) is stuck (not making forward progress) or fails after a while

Example 1: A Spark job hangs at the last task and eventually fails

Example 2: A Spark job fails with an executor OOM at a particular stage

- An application suddenly starts performing poorly

Example 1: A Hive query that used to take ~6 hrs now takes > 10 hrs

- No good understanding of which “knobs” (configuration parameters) to change to improve application performance and resource usage

- Need a self-serve platform to understand end-to-end how their specific applications are behaving

Today, engineers end up going to five different sources (e.g. CM/Ambari UI, Job History UI, Application Logs, Spark WebUI, AM/RM UI/Logs) to get an end-to-end understanding of application behavior and performance challenges. Further, these sources may be insufficient to truly understand the bottlenecks associated with an application (e.g. detailed container execution profiles, visibility into the transformations that execute within a Spark stage, etc.). See an example here on how to debug a Spark application. It’s cumbersome!

To add to these challenges, many developers do not have access to the sources listed above and have to go through their Ops team, which adds significant delays.
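As a small illustration of the "application is suddenly performing poorly" case, the sketch below flags a run whose duration is well above the median of its past runs. It is only a minimal sketch: the function name `is_regression`, the `tolerance` factor of 1.5, and the sample durations are all assumptions made for this example, not something taken from a customer system or from any Hadoop tool.

```python
from statistics import median


def is_regression(history_hours, latest_hours, tolerance=1.5):
    """Return True when the latest run is slower than tolerance x the median past run.

    history_hours: durations of earlier runs, in hours (hypothetical input).
    tolerance: assumed slowdown factor that counts as a regression.
    """
    if not history_hours:
        # Without a baseline there is nothing to compare against.
        return False
    baseline = median(history_hours)
    return latest_hours > tolerance * baseline


# Made-up history for a query that normally takes about 6 hours.
past_runs = [5.8, 6.1, 6.0, 6.3, 5.9]

print(is_regression(past_runs, 10.2))  # a run of just over 10 hours
print(is_regression(past_runs, 6.4))   # a run within the usual range
```

A median baseline is used here so that a single unusually slow past run does not shift the threshold much; a real check would likely also account for input data size and cluster load.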

Challenges faced by Data Operations Team(s)

Some of the most common challenges we have seen or heard of that are top of mind for Operations teams include:

- Lack of visibility into cluster usage from an application perspective

Example 1: Which applications cause my cluster usage (CPU, memory) to spike?

Example 2: Are queues being used optimally?

Example 3: Are the various data sets actually being used?

Example 4: Need a comprehensive chargeback/showback view for planning and budgeting purposes

- No good visibility into, or understanding of, when data pipelines miss SLAs. Where do we start to triage these issues?

Example 1: An Oozie-orchestrated data pipeline that needs to complete by 4 AM every morning is now consistently delayed and only completes by 6 AM

- Unable to control or manage runaway jobs that end up taking far more resources than needed, affecting the overall cluster and starving other applications

Example 1: Would need an automated way to track and manage these runaway jobs

- No good understanding of which “knobs” (configuration parameters) to change at the cluster level to improve overall application performance and resource usage

- How to quickly identify inefficient applications that can be reviewed and acted upon?

Example 1: Most Ops team members do not have deep Hadoop application expertise, so a way to quickly triage and understand the root causes of application performance degradation is very helpful
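To make the runaway-job item more concrete, here is a minimal sketch of an automated check that flags applications that have run for a long time while holding a large share of cluster memory. The record fields (`id`, `queue`, `allocatedMB`, `elapsedTime` in milliseconds) are modeled on what the YARN ResourceManager applications endpoint reports, but treat them as assumptions here. The function name, the thresholds, and the sample records are hypothetical.

```python
def find_runaway_apps(apps, max_elapsed_ms, max_memory_share, cluster_memory_mb):
    """Return (id, queue, memory_share) for apps exceeding both thresholds.

    apps: list of dicts with assumed keys id, queue, allocatedMB, elapsedTime.
    max_elapsed_ms: run time beyond which an app is considered long-running.
    max_memory_share: fraction of cluster memory considered excessive.
    """
    flagged = []
    for app in apps:
        share = app["allocatedMB"] / cluster_memory_mb
        if app["elapsedTime"] > max_elapsed_ms and share > max_memory_share:
            flagged.append((app["id"], app["queue"], round(share, 2)))
    # Largest memory consumers first, so triage starts with the worst offender.
    return sorted(flagged, key=lambda item: item[2], reverse=True)


# Hypothetical snapshot of running applications on a cluster with 1,000,000 MB.
running_apps = [
    {"id": "application_0001", "queue": "etl", "allocatedMB": 600_000, "elapsedTime": 28_800_000},
    {"id": "application_0002", "queue": "bi", "allocatedMB": 50_000, "elapsedTime": 30_000_000},
    {"id": "application_0003", "queue": "ml", "allocatedMB": 300_000, "elapsedTime": 600_000},
]

runaways = find_runaway_apps(
    running_apps,
    max_elapsed_ms=4 * 60 * 60 * 1000,  # assumed limit: 4 hours
    max_memory_share=0.25,              # assumed limit: 25% of cluster memory
    cluster_memory_mb=1_000_000,
)
print(runaways)
```

Requiring both conditions avoids flagging short bursts of heavy usage or long-running but small jobs; which thresholds make sense depends on the queues and workloads of a given cluster.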

The application and operations challenges above are real blockers that prevent enterprise customers from making their Hadoop applications production-ready. This, in turn, is slowing the ROI enterprises see from their Big Data investments.

Unravel’s vision is to address these broad challenges, and more, for modern data stack applications and operations: a full-stack performance intelligence and self-serve platform that can improve your modern data stack operations, make your applications reliable, and improve overall cluster utilization. In subsequent blog posts we will detail how we go about doing this and the (data) science behind these solutions.